For the total of a quantity with n contributors, we define the true, unperturbed total as

\(Y=\sum_{i=1}^{n}y_i \tag*{}\)

where \(y_i\) represents the value of interest from contributor \(i\). We assume a scenario where contributor \(k\) is attempting to find the value of contributor \(j\) in a total where any single contributor \(j \ne k\) is a passive claimant perturbed with log-Laplace multiplicative perturbation. Assuming the perturbation is bias-corrected, the correction is defined as \(c=\frac{1-b^2}{e^\mu}\) where \(b\in(0,\frac{1}{2}\ )\) is the dispersion parameter of the log-Laplace distribution (Appendix A.2 Proposition 4). The published total will therefore have the form,

\(\hat{Y}=ce^{X_j}y_j+\sum_{i=1,\ i\neq j}^{n}y_i \tag*{}\)

where \(X_j \sim Laplace(\mu,b)\). If contributor \(k\) uses their value to attempt to learn contributor \(j\)’s value, the new total has the form,

\(\hat{Y}-y_k=ce^{X_j}y_j+\sum_{i=1,\ i\neq j,k}^{n}y_i \tag*{}\)
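The role of the bias correction \(c\) can be checked numerically. The sketch below is illustrative only: the function names, the seed and the number of draws are assumptions, and the Laplace variate is generated as a scaled difference of two unit exponentials. It estimates \(E\left[ce^{X_j}\right]\), which should be close to 1 if the correction removes the bias of the multiplicative noise.

```python
import math
import random


def sample_laplace(mu, b, rng):
    """Draw X ~ Laplace(mu, b) as mu + b*(E1 - E2), with E1, E2 ~ Exp(1)."""
    return mu + b * (rng.expovariate(1.0) - rng.expovariate(1.0))


def bias_correction(mu, b):
    """Correction c = (1 - b^2) / e^mu from the text (requires b < 1)."""
    return (1.0 - b * b) / math.exp(mu)


def mean_noise_factor(mu, b, draws=200_000, seed=0):
    """Monte Carlo estimate of E[c * exp(X)]; draws and seed are arbitrary."""
    rng = random.Random(seed)
    c = bias_correction(mu, b)
    total = sum(c * math.exp(sample_laplace(mu, b, rng)) for _ in range(draws))
    return total / draws
```

For example, `mean_noise_factor(0.0, 0.1)` should return a value close to 1, up to simulation error.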

To assess disclosure risk according to the p% rule, we define \(p\in(0,1)\) and test whether \(\hat{Y}-y_k\) falls within p% of \(y_j\). The probability of this occurring can be formally expressed as,

\(P\left(\left(1-p\right)y_j<\ ce^{X_j}y_j+\sum_{i=1,\ i\neq j,k}^{n}y_i\ <\left(1+p\right)y_j\ |\ p,b,\mu,y_1,\ldots,\ y_n\right) \tag*{}\)
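As a concrete illustration of the event inside this probability, the following sketch evaluates the p% test for a single realisation of the noise. The helper names and the example values are hypothetical, and `noise_factor` stands for a realised value of \(ce^{X_j}\).

```python
def attacker_estimate(values, j, k, noise_factor):
    """Published total minus y_k, when only contributor j is perturbed.

    noise_factor is a realised value of c * exp(X_j).
    """
    others = sum(v for i, v in enumerate(values) if i not in (j, k))
    return noise_factor * values[j] + others


def violates_p_rule(estimate, y_j, p):
    """True when the estimate lies strictly within a proportion p of y_j."""
    return (1.0 - p) * y_j < estimate < (1.0 + p) * y_j
```

With `values = [100.0, 5.0, 3.0, 50.0]`, `j = 0`, `k = 3` and a noise factor of exactly 1, the attacker's estimate is 108, which lies within 10% of \(y_j=100\) but not within 5%.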

Proposition 1 (Probability of violating the p% rule when contributor \(j\) is perturbed with log-Laplace noise)

We begin by rearranging the inequality as follows,

\(\frac{1}{c}\left(1-p-\frac{\sum_{i=1,\ i\neq j,k}^{n}y_i}{y_j}\right)<\ e^{X_j}<\frac{1}{c}\left(1+p-\frac{\sum_{i=1,\ i\neq j,k}^{n}y_i}{y_j}\right) \tag*{}\)

Letting \( R=\frac{\sum_{i=1,\ i\neq j,k}^{n}y_i}{y_j}\), we transform the log-Laplace distribution into a Laplace distribution by taking the natural logarithm of the inequality,

\(\ln{\left(1-p-R\right)}-\ln{\left(c\right)}<\ X_j<\ln{\left(1+p-R\right)}-\ln{\left(c\right)} \tag*{}\)

This inequality is only valid when the arguments of the logarithms are positive, which places conditions on \(R\) and changes the formula for the disclosure probability. If it is possible for \(X_j\) to be in this interval, then there is a non-zero probability of disclosure. These conditions are separated into the cases outlined below.

Case 1: \(1-p>R\)

In this situation the lower bound of the p% interval (and hence the upper bound) is dominated by the contribution being attacked, so there is a possible disclosure risk for \(y_j\). In this case, no adjustment is required for the interval above to be valid. The disclosure risk is given by,

\(P\left(\left(1-p\right)y_j<\ ce^{X_j}y_j+\sum_{i=1,\ i\neq j,k}^{n}y_i<\left(1+p\right)y_j\ |\ p,b,\mu,y_1,\ldots,\ y_n\right)= \\ \ F_X\left(\ln{\left(1+p-R\right)}-\ln{\left(c\right)}\right)-\ F_X\left(\ln{\left(1-p-R\right)}-\ln{\left(c\right)}\right)\ \tag*{}\)

where \(F_X(x)\) is the cumulative distribution function (cdf) of the random variable \(X_j\sim Laplace\left(\mu,b\right)\).

Case 2: \(1-p\le R\) and \(1+p>R\)

This situation depicts a case where the lower bound of the p% interval is dominated by the other contributors, meaning that approximating \(y_j\) at this lower bound is not possible. The upper bound does still dominate over the other contributors though, meaning that an approximation up to p% higher than the true value is still possible. This case requires the bounds to be changed to,

\(-\infty<\ X_j<\ln{\left(1+p-R\right)-\ln{\left(c\right)}} \tag*{}\)

And the disclosure risk is given by,

\(P\left(\ ce^{X_j}y_j+\sum_{i=1,\ i\neq j,k}^{n}y_i<\left(1+p\right)y_j\ |p,b,\ \mu,\ y_1,\ldots,\ y_n\right)=\ F_X\left(\ln{\left(1+p-R\right)}-\ln{\left(c\right)}\right) \tag*{}\)

Case 3: \(1-p\le R\) and \(1+p\le R\)

This is a situation where both extremes of the p% interval are dominated by other contributors. This means the disclosure risk is 0 without other prior knowledge of the contributor,

\(P\left(\left(1-p\right)y_j<\ ce^{X_j}y_j+\sum_{i=1,\ i\neq j,k}^{n}y_i<\left(1+p\right)y_j\ |\ p,b,\mu,\ y_1,\ldots,\ y_n\right)=\ 0 \tag*{}\)

Proof

We use the cumulative distribution function (cdf) for the Laplace distribution (proof not shown) given by,

\(F_X\left(x\right)=\ \int_{-\infty}^{x}{f_X\left(t\right)\ dt}=\frac{1}{2b}\int_{-\infty}^{x}{\exp{\left(-\frac{\left|t-\mu\right|}{b}\right)}\ dt} \tag*{}\)

which can be represented in piecewise form as,

\(F_X\left(x\right)= \begin{cases} \frac{1}{2}\exp{\left(\frac{x-\mu}{b}\right)}\ \ &x<\mu \\ 1-\frac{1}{2}\exp{\left(-\frac{x-\mu}{b}\right)} \ \ &x\ge\mu \end{cases} \tag*{}\)
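The piecewise form translates directly into code. The following is a minimal sketch; the function name `laplace_cdf` is an assumption made for illustration.

```python
import math


def laplace_cdf(x, mu, b):
    """Piecewise cdf of X ~ Laplace(mu, b), with b > 0."""
    if x < mu:
        return 0.5 * math.exp((x - mu) / b)
    return 1.0 - 0.5 * math.exp(-(x - mu) / b)
```

The two branches meet at \(x=\mu\), where the cdf equals \(\frac{1}{2}\), and the function is symmetric about \(\mu\) in the sense that \(F_X(\mu+d)+F_X(\mu-d)=1\).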

Since the p% interval is bounded differently due to the arguments of the natural logarithms of the transformed interval, we will address each case separately.

Case 1: \(1-p>R\)

The entire transformed interval is valid in this case so no additional manipulation is required.

\(P\left(\left(1-p\right)y_j<\ ce^{X_j}y_j+\sum_{i=1,\ i\neq j,k}^{n}y_i<\left(1+p\right)y_j\ |\ p,b,\mu,y_1,\ldots,\ y_n\right)= \\ P\left(\ln{\left(1-p-R\right)}-\ln{\left(c\right)}<\ X_j<\ln{\left(1+p-R\right)}-\ln{\left(c\right)}|\ p,b,\mu,y_1,\ldots,\ y_n\right)\)

Apply the definition of the probability on a continuous interval in terms of the probability density function.

\(\int_{\ln{\left(1-p-R\right)-\ln{\left(c\right)}}}^{\ln{\left(1+p-R\right)}-\ln{\left(c\right)}}{f_X\left(t\right)\ dt}\)

Since \(f_X\left(x\right) \) is a valid pdf on the interval \( x\in(-\infty,\ \infty)\), we can write the integral as the difference of two integrals that share the lower limit \(v\) and let \(v\to-\infty\),

\(= \lim_{v \ \to \ -\infty}{\int_{v}^{\ln{\left(1+p-R\right)-\ln{\left(c\right)}}}{f_X\left(t\right)\ dt}-\ \int_{v}^{\ln{\left(1-p-R\right)-\ln{\left(c\right)}}}{f_X\left(t\right)\ dt}} \)

Apply the definition of the cdf, \(F_X(x)\).

\(=F_X\left(\ln{\left(1+p-R\right)}-\ln{\left(c\right)}\right)-\ F_X\left(\ln{\left(1-p-R\right)}-\ln{\left(c\right)}\right)\)

Therefore,

\(P\left(\left(1-p\right)y_j<\ ce^{X_j}y_j+\sum_{i=1,\ i\neq j,k}^{n}y_i<\left(1+p\right)y_j\ |\ p,b,\mu,\ y_1,\ldots,\ y_n\right)= \\ \ F_X\left(\ln{\left(1+p-R\right)}-\ln{\left(c\right)}\right)-\ F_X\left(\ln{\left(1-p-R\right)}-\ln{\left(c\right)}\right) \tag*{}\)

Case 2: \(1-p\le R\) and \(1+p>R\)

As previously explained, \(1-p-R\le0\) in this case, so the lower inequality \(\frac{1}{c}\left(1-p-R\right)<e^{X_j}\) holds for every value of \(X_j\) on the support of the pdf, \( x\in(-\infty,\ \infty)\). We can therefore replace the lower bound of the interval with the lower bound of the support.

\(P\left(\left(1-p\right)y_j<\ ce^{X_j}y_j+\sum_{i=1,\ i\neq j,k}^{n}y_i<\left(1+p\right)y_j\ |\ p,b,\mu,y_1,\ldots,\ y_n\right)= \\ P\left(-\infty<\ X_j<\ln{\left(1+p-R\right)}-\ln{\left(c\right)}\ |\ p,b,\mu,y_1,\ldots,\ y_n\right) \)

Express the probability using the pdf.

\(=\lim_{v\ \to \ -\infty}\int_{v}^{\ln{\left(1+p-R\right)}-\ln{\left(c\right)}}{f_X\left(t\right)\ dt}\)

Apply the definition of the cdf.

\(=F_X\left(\ln{\left(1+p-R\right)}-\ln{\left(c\right)}\right)\)

Therefore,

\(P\left(\left(1-p\right)y_j<ce^{X_j}y_j+\sum_{i=1,\ i\neq j,k}^{n}y_i<\left(1+p\right)y_j\ |\ p,b,\mu,y_1,\ldots,\ y_n\right)=\ F_X\left(\ln{\left(1+p-R\right)}-\ln{\left(c\right)}\right) \tag*{}\)

Case 3: \(1-p\le R\) and \(1+p\le R\)

Since the interval is not valid in this case, there is no disclosure risk. Therefore,

\(P\left(\left(1-p\right)y_j<ce^{X_j}y_j+\sum_{i=1,\ i\neq j,k}^{n}y_i<\left(1+p\right)y_j\ |\ p,b,\mu,y_1,\ldots,\ y_n\right)=\ 0 \tag*{}\) ∎
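The three cases of Proposition 1 can be cross-checked by simulation. The sketch below is illustrative rather than a reference implementation: the function names, seed and number of draws are assumptions, and it assumes \(y_j>0\) so that the p% inequality can be divided through by \(y_j\), which leaves the event \(1-p<ce^{X_j}+R<1+p\).

```python
import math
import random


def laplace_cdf(x, mu, b):
    """Piecewise cdf of X ~ Laplace(mu, b)."""
    if x < mu:
        return 0.5 * math.exp((x - mu) / b)
    return 1.0 - 0.5 * math.exp(-(x - mu) / b)


def disclosure_probability(p, b, mu, R):
    """Probability from Proposition 1, with R = (sum of other y_i) / y_j."""
    c = (1.0 - b * b) / math.exp(mu)
    if 1.0 + p <= R:  # Case 3: interval cannot be reached
        return 0.0
    upper = math.log(1.0 + p - R) - math.log(c)
    if 1.0 - p <= R:  # Case 2: lower bound becomes -infinity
        return laplace_cdf(upper, mu, b)
    lower = math.log(1.0 - p - R) - math.log(c)  # Case 1
    return laplace_cdf(upper, mu, b) - laplace_cdf(lower, mu, b)


def simulated_probability(p, b, mu, R, draws=200_000, seed=1):
    """Monte Carlo estimate of the same probability (assumes y_j > 0)."""
    rng = random.Random(seed)
    c = (1.0 - b * b) / math.exp(mu)
    hits = 0
    for _ in range(draws):
        # Laplace(mu, b) draw as a scaled difference of two Exp(1) draws.
        x = mu + b * (rng.expovariate(1.0) - rng.expovariate(1.0))
        ratio = c * math.exp(x) + R  # (Y_hat - y_k) / y_j
        if 1.0 - p < ratio < 1.0 + p:
            hits += 1
    return hits / draws
```

For instance, with \(p=0.1\), \(b=0.1\), \(\mu=0\) and \(R=0.05\) (Case 1), the formula gives a probability of roughly 0.61, and the simulated value should agree up to Monte Carlo error.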

B.1.2 Corollary (Specific results for \(\mu=0\))

Further simplifications to the cases shown previously when \(\mu=0\) are possible and will be shown below. In these cases, we assume \(X_j\sim Laplace(0,b)\).

Case 1: \(1-p>R\)

This can be split into the following 3 cases depending upon whether the upper and lower bounds of the interval fall below or above \(\mu=0\). These cases are summarised below,

Case 1.1: \(\ln{\left(1-p-R\right)}-\ln(c)<0 \) and \(\ln{\left(1+p-R\right)}-\ln(c)<0\) while \(1-p>R\) remains TRUE

| Lower Bound | Upper Bound |
| --- | --- |
| \(\ln{\left(1-p-R\right)}-\ln(c)<0\) | \(\ln{\left(1+p-R\right)}-\ln(c)<0\) |
| \(\frac{1-p-R}{c}<1\) | \(\frac{1+p-R}{c}<1\) |
| \(1-p-R<c\) | \(1+p-R<c\) |
| \(1-p-c<R\) | \(1+p-c<R\) |

Since \( p\in(0,1)\), both conditions on the bounds are TRUE if \(1+p-c<R\). It is also possible for \(1-p>R\) to be TRUE simultaneously, provided \(c>2p\) so that \(1+p-c<1-p\). Therefore we can simplify the Case 1 equation,

\(P\left(\left(1-p\right)y_j<\ ce^{X_j}y_j+\sum_{i=1,\ i\neq j,k}^{n}y_i<\left(1+p\right)y_j\ |\ p,b,\mu,y_1,\ldots,y_n\right)=\\F_X\left(\ln{\left(\frac{1+p-R}{c}\right)}\right)-\ F_X\left(\ln{\left(\frac{1-p-R}{c}\right)}\right)\)

\(=\frac{1}{2}\exp{\left(\frac{1}{b}\ln{\left(\frac{1+p-R}{c}\right)}\right)}-\frac{1}{2}\exp{\left(\frac{1}{b}\ln{\left(\frac{1-p-R}{c}\right)}\right)}\)

\(=\frac{1}{2}\left[\left(\frac{1+p-R}{c}\right)^\frac{1}{b}-\left(\frac{1-p-R}{c}\right)^\frac{1}{b}\right],\ if\ 1+p-c<R<1-p\)

Case 1.2: \(\ln{\left(1-p-R\right)}-\ln(c)<0\) and \(\ln{\left(1+p-R\right)}-\ln{\left(c\right)}\geq0\) while \(1-p>R\) remains TRUE

| Lower Bound | Upper Bound |
| --- | --- |
| \(\ln{\left(1-p-R\right)}-\ln(c)<0\) | \(\ln{\left(1+p-R\right)}-\ln{\left(c\right)}\geq0\) |
| \(\frac{1-p-R}{c}<1\) | \(\frac{1+p-R}{c}\geq1\) |
| \(1-p-R<c\) | \(1+p-R\geq c\) |
| \(1-p-c<R\) | \(1+p-c\geq R\) |

Since \(p\in(0,1)\), the two conditions on the bounds combine to give \(1-p-c<R\le1+p-c\). For \(1-p>R\) to be TRUE simultaneously, we modify the condition to \( 1-p-c<R\le \min(1+p-c,1-p)\). The Case 1 expression then becomes,

\(P\left(\left(1-p\right)y_j<\ ce^{X_j}y_j+\sum_{i=1,\ i\neq j,k}^{n}y_i<\left(1+p\right)y_j\ |\ p,b,\mu,y_1,\ldots,y_n\right)=\\F_X\left(\ln{\left(\frac{1+p-R}{c}\right)}\right)-\ F_X\left(\ln{\left(\frac{1-p-R}{c}\right)}\right) \\ =1-\frac{1}{2}\exp{\left(-\frac{1}{b}\ln{\left(\frac{1+p-R}{c}\right)}\right)}-\frac{1}{2}\exp{\left(\frac{1}{b}\ln{\left(\frac{1-p-R}{c}\right)}\right)} \\ =1-\frac{1}{2}\left[\left(\frac{1+p-R}{c}\right)^{-\frac{1}{b}}+\left(\frac{1-p-R}{c}\right)^\frac{1}{b}\right],\ if\ 1-p-c<R\le \min(1+p-c,1-p)\)

Case 1.3: \(\ln{\left(1-p-R\right)}-\ln(c)\geq0 \) and \(\ln{\left(1+p-R\right)}-\ln(c)\geq0\) while \(1-p>R \) remains TRUE

| Lower Bound | Upper Bound |
| --- | --- |
| \(\ln{\left(1-p-R\right)}-\ln(c)\geq0\) | \(\ln{\left(1+p-R\right)}-\ln(c)\geq0\) |
| \(\frac{1-p-R}{c}\geq1\) | \(\frac{1+p-R}{c}\geq1\) |
| \(1-p-R\geq c\) | \(1+p-R\geq c\) |
| \(1-p-c\geq R\) | \(1+p-c\geq R\) |

Since \(p\in(0,1)\), both conditions on the bounds are satisfied if \(1-p-c\geq R\). In this instance, \(1-p>R\) is automatically TRUE as well so the Case 1 expression becomes,

\(P\left(\left(1-p\right)y_j<\ ce^{X_j}y_j+\sum_{i=1,\ i\neq j,k}^{n}y_i<\left(1+p\right)y_j\ |\ p,b,\mu,y_1,\ldots,y_n\right)=\\F_X\left(\ln{\left(\frac{1+p-R}{c}\right)}\right)-\ F_X\left(\ln{\left(\frac{1-p-R}{c}\right)}\right) \\ =1-\frac{1}{2}\exp{\left(-\frac{1}{b}\ln{\left(\frac{1+p-R}{c}\right)}\ \right)}-1+\frac{1}{2}\exp{\left(-\frac{1}{b}\ln{\left(\frac{1-p-R}{c}\right)}\right)} \\ =\frac{1}{2}\left[\left(\frac{1-p-R}{c}\right)^{-\frac{1}{b}}-\left(\frac{1+p-R}{c}\right)^{-\frac{1}{b}}\right],\ if\ 1-p-c\geq R\)

Case 2: \(1-p \le R\) and \(1+p>R\)

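This case can be split into 2 additional cases depending on whether the upper bound is negative or non-negative (since the lower bound is \(-\infty\)). These cases are summarised below:

Case 2.1: \(\ln{\left(1+p-R\right)}-\ln(c)<0 \) while \(1-p\le R\) and \(1+p>R\) remain TRUE.

We simplify the condition on the upper bound to \(1+p-c<R\) as in Case 1.1. Since \(p\in(0,1)\), \(1+p>R\) will always be the upper bound on \(R\) for this inequality to hold, while \(1+p-c \) or \(1-p\), whichever is larger, will act as the lower bound on \(R\). Therefore Case 2 simplifies to,

\(P\left(\left(1-p\right)y_j<\ ce^{X_j}y_j+\sum_{i=1,\ i\neq j,k}^{n}y_i<\left(1+p\right)y_j\ |\ p,b,\mu,y_1,\ldots,y_n\right)=\\F_X\left(\ln{\left(\frac{1+p-R}{c}\right)}\right)\\ =\frac{1}{2}\exp{\left(\frac{1}{b}\ln{\left(\frac{1+p-R}{c}\right)}\right)} \\ =\frac{1}{2}\left(\frac{1+p-R}{c}\right)^\frac{1}{b},\ if\ \max{\left(1+p-c,1-p\right)}\le R<1+p\)

Case 2.2: \(\ln{\left(1+p-R\right)}-\ln(c)\geq0 \) while \(1-p\le R\) and \(1+p>R\) remain TRUE.

We simplify the condition on the upper bound to \(1+p-c\geq R\) as in Case 1.2. Combined with \(1-p\le R\), this gives \(1-p\le R\le1+p-c\), which is only possible when \(c\le2p\). Therefore Case 2 simplifies to,

\(P\left(\left(1-p\right)y_j<\ ce^{X_j}y_j+\sum_{i=1,\ i\neq j,k}^{n}y_i<\left(1+p\right)y_j\ |\ p,b,\mu,y_1,\ldots,y_n\right)=\\F_X\left(\ln{\left(\frac{1+p-R}{c}\right)}\right)\\ =1-\frac{1}{2}\exp{\left(-\frac{1}{b}\ln{\left(\frac{1+p-R}{c}\right)}\right)} \\ =1-\frac{1}{2}\left(\frac{1+p-R}{c}\right)^{-\frac{1}{b}},\ if\ 1-p\le R\le1+p-c\)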

Case 3: \(1+p \le R\)

Since this case occurs in a situation where there is no disclosure risk, there is no further simplification to make. Therefore,

\( P\left(\left(1-p\right)y_j<\ ce^{X_j}y_j+\sum_{i=1,\ i\neq j,k}^{n}y_i<\left(1+p\right)y_j\ |\ p,b,\mu,y_1,\ldots,y_n\right)=0\)

Combining all cases: Equation B.1.3.

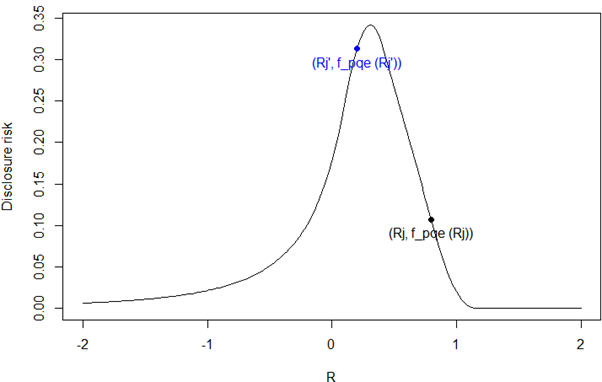

In this way, all cases can be defined in terms of \(R\) as the following piecewise function,

\(P\left(\left(1-p\right)y_j<\ ce^{X_j}y_j+\sum_{i=1,\ i\neq j,k}^{n}y_i<\left(1+p\right)y_j\ |\ p,b,\mu,y_1,\ldots y_n\right)= \\ \begin{cases} \frac{1}{2}\left[\left(\frac{1-p-R}{c}\right)^{-\frac{1}{b}}-\left(\frac{1+p-R}{c}\right)^{-\frac{1}{b}}\right], \ \ if\ \ R\le1-p-c\ \\ 1-\frac{1}{2}\left[\left(\frac{1+p-R}{c}\right)^{-\frac{1}{b}}+\left(\frac{1-p-R}{c}\right)^\frac{1}{b}\right],\ \ if\ 1-p-c<R\le\min{\left(1-p,1+p-c\right)} \\ \frac{1}{2}\left[\left(\frac{1+p-R}{c}\right)^\frac{1}{b}-\left(\frac{1-p-R}{c}\right)^\frac{1}{b}\right],\ \ {if\ \ 1+p-c<R<1-p} \\ 1-\frac{1}{2}\left(\frac{1+p-R}{c}\right)^{-\frac{1}{b}},\ \ {if\ \ \ 1-p\le R\le1+p-c} \\ \frac{1}{2}\left(\frac{1+p-R}{c}\right)^\frac{1}{b},\ \ if\max{\left(1+p-c,1-p\right)}\le R<1+p \\ 0,\ \ if\ R\geq1+p \end{cases}\)

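Note that the third and fourth cases cannot both occur for a given pair of values of \(p\) and \(c\): the third requires \(1+p-c<1-p\), that is \(c>2p\), while the fourth requires \(1-p\le1+p-c\), that is \(c\le2p\).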
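As a consistency check, the piecewise function above can be compared with the general cdf-based result of Proposition 1 evaluated at \(\mu=0\). The sketch below is one possible way to do this; the function names are assumptions, and the branch order is chosen so that each power is only evaluated where its base is positive.

```python
import math


def laplace_cdf(x, b):
    """cdf of X ~ Laplace(0, b)."""
    if x < 0.0:
        return 0.5 * math.exp(x / b)
    return 1.0 - 0.5 * math.exp(-x / b)


def general_probability(p, b, R):
    """Proposition 1 with mu = 0, so c = 1 - b^2."""
    c = 1.0 - b * b
    if R >= 1.0 + p:
        return 0.0
    upper = math.log((1.0 + p - R) / c)
    if R >= 1.0 - p:
        return laplace_cdf(upper, b)
    lower = math.log((1.0 - p - R) / c)
    return laplace_cdf(upper, b) - laplace_cdf(lower, b)


def closed_form_probability(p, b, R):
    """Piecewise closed form in R for mu = 0."""
    c = 1.0 - b * b
    k = 1.0 / b
    if R >= 1.0 + p:                      # no disclosure risk
        return 0.0
    up = (1.0 + p - R) / c                # positive because R < 1 + p
    if R >= 1.0 - p:                      # Case 2
        if R <= 1.0 + p - c:
            return 1.0 - 0.5 * up ** (-k)
        return 0.5 * up ** k
    lo = (1.0 - p - R) / c                # positive because R < 1 - p
    if R <= 1.0 - p - c:                  # Case 1.3
        return 0.5 * (lo ** (-k) - up ** (-k))
    if R <= 1.0 + p - c:                  # Case 1.2
        return 1.0 - 0.5 * (up ** (-k) + lo ** k)
    return 0.5 * (up ** k - lo ** k)      # Case 1.1
```

Evaluating both functions over a grid of \(p\), \(b\) and \(R\) values should give matching results up to floating-point error, including at the boundaries between cases, where adjacent expressions coincide.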